As lawsuits mount and new rules arrive, artificial intelligence is already inside the newsroom. For a small publisher like me, it is both a lifeline and a risk — a tool that can help tell the truth, or quietly hollow it out.

By Carlos Taylhardat | 3 Narratives News | November 23, 2025

Most nights at 3 Narratives News start the same way: a blank WordPress window, a stack of open tabs, and an AI assistant humming quietly in another browser tab.

I use that assistant for the unglamorous parts of journalism: checking dates on court rulings, summarizing dense regulatory texts, sorting through long reports, and helping structure a story that has too many moving parts. The promise is simple: if a one-person newsroom can tap the same kind of tools as a multinational, then maybe the little outlet has a fighting chance.

But while I edit the answers it gives me, another story plays out in the background. Around the world, news organizations and authors are suing AI companies for training on their work without permission. The New York Times is fighting OpenAI and Microsoft over millions of articles allegedly scraped to build chatbots that now compete for readers’ attention. A growing cluster of authors and publishers have brought similar cases against OpenAI, Microsoft and Meta, many of them now consolidated in a single federal court in New York.

In Europe, the EU’s new AI Act introduces transparency rules that will force the largest AI models to reveal more about the data they were trained on and to respect copyright opt-outs. In the United States, a landmark settlement with Anthropic will pay authors roughly $1.5 billion for pirated books used in an early training corpus, even as the court ruled that training on lawfully obtained books could be fair use.

In other words, the tool I lean on to survive as a publisher sits squarely in the middle of a global fight over creative rights.

So this is an inside look at that contradiction through the three narratives we use at 3 Narratives News. One sees AI as a superpower for small newsrooms. Another sees it as an engine of misinformation and extraction. The third asks what an ordinary reader can do in 2026 to tell the difference.

Context: How AI Walked Into the Newsroom

Until very recently, “automation” in journalism meant things like print-layout software, traffic dashboards, or tools for moving copy between desks. Generative AI changed the conversation. Suddenly, the same kind of model that can draft a poem can also draft a news story.

Publishers have moved faster than many readers realize. Surveys of news executives in 2023 and 2024 found that more than half of publishers were already using AI for back-end automation and content recommendations, with roughly a quarter experimenting with AI-assisted content creation under human oversight. An Associated Press–supported study described newsroom use of generative AI as widespread but uneven, with ethical safeguards lagging behind experimentation.

At the same time, big media brands are building their own AI products. TIME, for example, launched an AI-powered audio briefing that turns its newsletter into a short dialogue between synthetic voices trained to stay within the bounds of TIME’s verified reporting.

Outside the newsroom, the stakes are even higher. Analysts warn that if AI systems can generate real-time news summaries and “good enough” answers without sending readers back to the original outlets, they could strip publishers of both traffic and revenue, just as social media did a decade ago — only faster.

Meanwhile, public trust is under pressure from another direction: deepfakes, synthetic audio, and AI-generated misinformation. Reports from media-trust projects in 2025 show that many news consumers already assume publishers are using AI more than they admit, and worry that it could quietly replace human judgment.

At 3 Narratives News, we’ve tried to walk directly into that tension. Our promise is simple and printed on our site: Two Sides. One Story. You Make the Third. Our AI-use page spells out how we use tools like this one — and what we don’t let them do. We also built a story about legacy media, alternative media and the case for “two truths” to explain why we think transparency about process matters as much as the headline.

With that context, here are the three narratives around AI in the newsroom, told from the inside.

Narrative 1: AI as the Small Publisher’s Superpower

In this version of the story, AI is the thing that lets a tiny newsroom punch above its weight.

Imagine you are running a one-person outfit that covers geopolitics, climate, elections and technology, often in the same week. The reading alone can swallow your day: court filings, official reports, policy papers, and long investigations from bigger outlets. For much of modern journalism, the real bottleneck is not writing; it is processing information fast enough to tell a coherent story.

This is where AI feels like a miracle.

Instead of spending an entire afternoon extracting key dates from a 200-page court decision, you can ask an AI assistant to summarize the timeline, then go back to the original document to verify and add texture. Instead of struggling to structure a complex story (say, Trump’s 28-point Ukraine peace proposal or the tangled family tree of the “Epstein files”), you can use AI as a sounding board for possible outlines, then choose the one that best matches your judgment and your readers’ needs.

Used this way, AI is not a ghostwriter. It is more like a very fast, easily distracted intern who is good at summarizing, bad at nuance, and needs constant supervision (lol, I know this too well).

For small publishers, there is also a financial argument. If AI can take over tasks like transcribing interviews, generating clean HTML, or creating first-draft image briefs, then more of the limited time and budget can go to the distinctly human work: interviewing, verifying, and writing in a voice that feels lived rather than generic.

The innovation narrative extends beyond the newsroom, too. When an outlet like TIME turns its newsletter into an AI-generated conversation between two characters built on top of its verified reporting, it is not replacing journalism; it is changing the format of how that journalism is delivered, from text into a kind of audio theatre. For publishers with aging or busy audiences, formats like that may be the difference between being read and being ignored.

In this worldview, AI is a democratizing force. It gives a small operation in Vancouver or Lagos the tools that used to be available only to giants in New York or London. It can help a new outlet like 3 Narratives News produce deeply structured pieces on Ukraine, Venezuela or Jeffrey Epstein’s emails with a level of polish and speed that would have been unthinkable five years ago. A small publisher who doesn’t need political sponsors to survive can now tell a story based on the story itself, not on an agenda.

The key assumption here is that humans stay in charge. Editors set standards. Reporters choose sources. AI does the heavy lifting in the background, but never gets the final word.

Narrative 2: AI as an Engine of Misinformation and Extraction

Now turn the same tools around and look from another angle. In this narrative, AI in the newsroom is less a superpower and more a loaded weapon lying on the table.

Start with the training data. A wave of lawsuits from authors, artists, music rights organizations and publishers accuses major AI companies of building their models on pirated or unlicensed content: books taken from shadow libraries, news articles behind subscription paywalls, and song lyrics protected by law. When a system trained on that material generates fluent summaries and explanations, it competes directly with the very outlets that produced the underlying work.

From this point of view, the settlement that pays authors for pirated books is not a sign of progress. It is evidence that AI companies made a calculated gamble: copy first, negotiate later, and treat any legal penalties as the cost of doing business.

Then there is accuracy. Generative AI systems are notorious for “hallucinations”: confident answers built on false or fabricated details (lol, I am also used to this). In a lab setting, this is a technical bug. In a newsroom, it is a potential libel suit or a piece of misinformation spreading at machine speed.

Early newsroom experiments with fully AI-generated articles have not gone well. Large outlets that quietly published unlabelled AI stories were caught when readers spotted factual errors and generic phrasing; corrections and apologies followed. Reports from media analysts warn that, without clear guardrails, AI could accelerate a familiar pattern: platforms and aggregators pulling value away from original reporting while leaving publishers to absorb the reputational risk.

The power imbalance is not only legal or technical. It is economic. A handful of AI companies, backed by some of the richest firms in history, now sit between publishers and readers. If audiences increasingly consume “news” through AI assistants that summarize and remix original reporting without sending traffic back, publishers may find themselves in a position that one analysis described starkly as “doom for the Fourth Estate.”

Finally, there is the human cost inside the newsroom. When managers see a tool that can produce acceptable copy quickly, the temptation to cut staff is real. Some newsrooms are already experimenting with using AI for routine sports recaps, earnings reports or real estate listings. For journalists who have seen wave after wave of layoffs, the fear is straightforward: that the next cost-cutting memo will quietly use AI as justification.

In this narrative, AI is not primarily a tool for truth. It is a continuation of the same extraction logic that has hollowed out local news for years, only now the extraction is happening not just to advertising revenue and classified listings, but to the creative work itself.

Narrative 3: How Readers Can Tell Who to Trust

Beneath both narratives sits a quieter, more practical question: as a reader in 2026, how do you know who to trust?

You cannot see the training data behind every AI system. You cannot personally audit every newsroom’s workflow. What you can see are signals and habits that either invite trust or erode it.

Here are some of the signals I watch for, as both a publisher and a reader:

- Real names and real accountability. Does the article have a specific byline (“By Jane Doe”), and does that name link to an author page with a biography, photo and areas of expertise? Or is it “By Staff” and nothing more? At 3 Narratives News, our standard operating procedure requires a real human byline on every story, linked to an author page, precisely because readers deserve someone to hold accountable.

- Clear AI-use disclosures. Does the publication explain, in plain language, how it uses AI? Is there a page like our How We Use AI policy that you can read, or is the topic treated as a trade secret?

- Corrections and humility. When an error is found, does the outlet correct it transparently and link to a corrections page? AI tools can make mistakes at scale; a newsroom that admits and fixes errors is safer than one that insists it is always right.

- Depth and specificity. AI is good at surface-level prose and pattern-matching. It is less convincing when it comes to very specific, lived detail: the shape of a hallway, the name of a local organizer, the texture of an argument between two real people. Stories that include those kinds of grounded specifics are harder to fake.

- Links you can follow. Trusted outlets show their homework. They link out to court documents, official reports, and other coverage so you can see the source material. When we wrote about the “Epstein files” and later about Epstein’s emails, we deliberately pointed readers toward primary documents and multiple outlets, not just our own summary.

The silent story here is not about AI models or copyright clauses. It is about whether readers are treated as partners in the search for truth or as passive consumers of whatever the algorithm serves up next.

If there is a hopeful note, it is this: the same audience research that shows anxiety about AI also shows a clear desire for transparency. People say they want to know when AI is used, how it is supervised, and who is responsible when things go wrong.

That gives small publishers like me a simple, hard job: to use these tools out in the open, to say when they helped and when they failed, and to keep the final decisions human. AI can help us handle the flood of information. It cannot carry the weight of trust.

Key Takeaways

- Generative AI is already embedded in many newsrooms, helping with tasks from transcription and summarization to recommendation engines and experimental content formats.

- At the same time, major lawsuits and new regulations highlight deep conflicts over how AI models use copyrighted books, articles, music and images without clear permission.

- For small publishers, AI can be a “superpower” that makes serious reporting possible on limited budgets — if humans stay firmly in charge of verification and voice.

- Critics warn that AI can accelerate misinformation, undermine authors’ rights and strip traffic and revenue away from the outlets that produce original reporting.

- Readers in 2026 can protect themselves by looking for real bylines, clear AI-use disclosures, transparent corrections and visible links to underlying sources.

Questions This Article Answers

- How are newsrooms actually using AI today?

Many newsrooms use AI behind the scenes for tasks like transcription, translation, headline testing, and content recommendations. A growing number also experiment with AI-assisted writing under human oversight and with new formats such as AI-generated audio briefings based on verified reporting.

- Why are authors, artists and publishers suing AI companies?

Plaintiffs argue that some AI models were trained on copyrighted books, news articles, images and music obtained without permission, including pirated copies, and that these systems now generate content that competes with their work. Recent settlements and rulings have focused both on how training data was acquired and on whether training on lawfully obtained copyrighted material can be considered fair use.

- What is the EU AI Act and why does it matter for creative rights?

The EU AI Act is a new European law that sets rules for AI systems, including transparency obligations for powerful general-purpose models. Among other things, it requires AI providers to respect copyright opt-outs and to publish summaries of the training data they use, giving authors and publishers more visibility into how their work may have been used.

- Can AI replace human journalists?

AI can quickly summarize, rewrite and synthesize information, but it lacks lived experience, moral judgement and accountability. It cannot conduct a sensitive interview, make ethical calls in dangerous situations, or take responsibility for errors. Most serious newsrooms treat AI as a tool that supports journalists, not a replacement for them.

- How can I tell if a publication is using AI responsibly?

Look for clear bylines linked to real author pages, public AI-use and corrections policies, specific details that reflect on-the-ground reporting, and links to primary documents. Outlets that talk openly about their workflow and correct mistakes transparently are more likely to be using AI as a tool for truth rather than as a shortcut or a disguise.

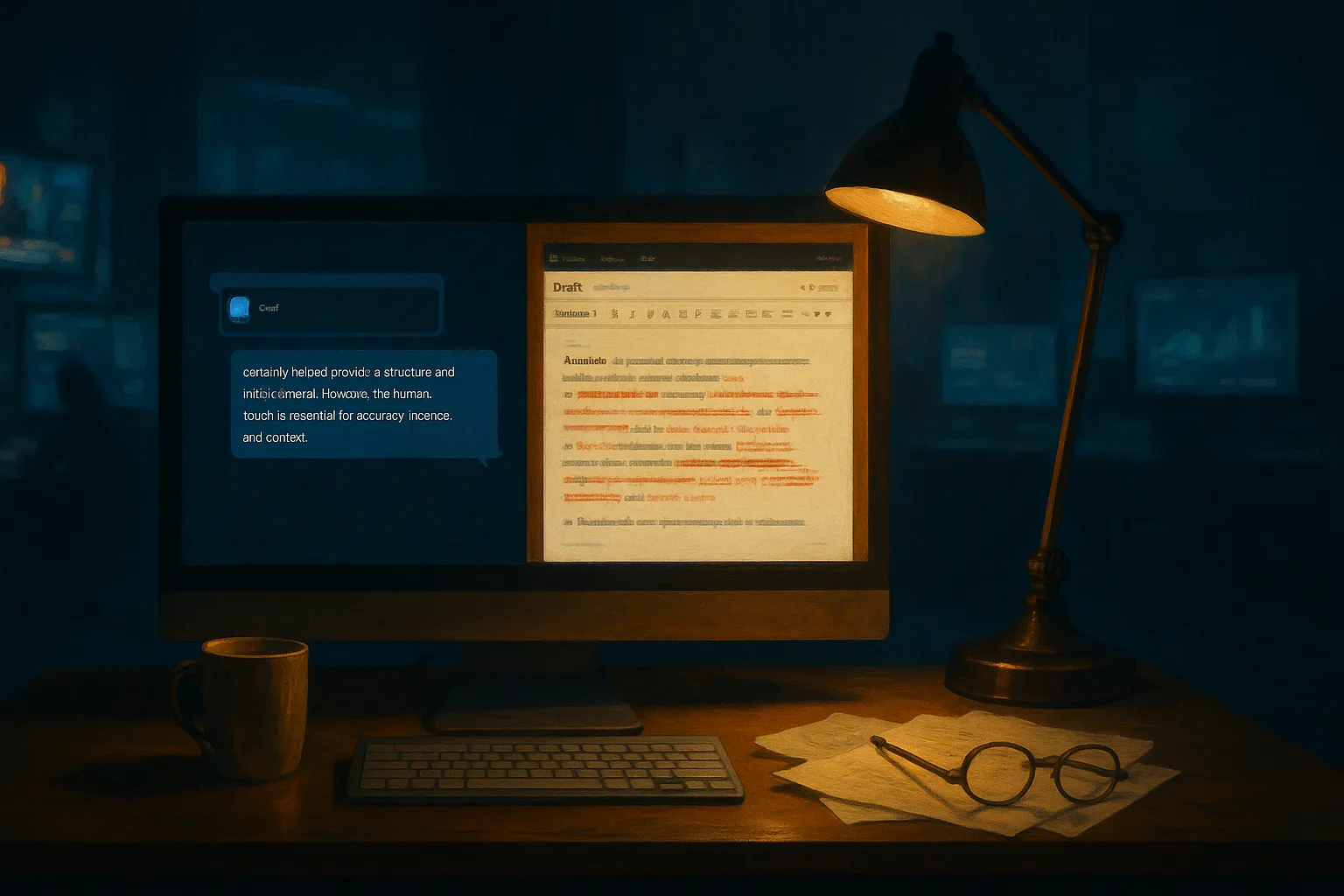

Alt text: “A journalist’s desk with an AI chat window and an edited article side by side on a computer screen, symbolising the tension between automation and human judgement in the newsroom.”

Process & AI-Use Disclosure: This article was reported and edited by a human journalist, with AI tools used for background research, document review and first-draft assistance. All quotes, facts and conclusions were reviewed and approved by a human editor before publication. For more, see How We Use AI and our Corrections page.